The colored lines are the debugging output from my script.

It is about 400 lines, could be shorter, and uses openCV for canny edge detect, hough transform and graphic display.

It uses imagemagick, but currently by calling sobelseries.bat and convert.exe through the shell. I want to use the imagemagick python interface instead, but do not know when I get around to that.

I did not implement my own hough transform, but I probably should, because the opencv one is lousy. No working Non-Maximum-Supression, and no way to access the accumulator values for each line, just their order. No simple way to use orientation information for hough transform either.

The algorithm for selecting the best quadrangle was created by me, and is a bit hacked, but it seems to work. Basically I sort the lines by strength, take the strongest detected line, and keep adding lines that satisfy all quadrangle criteria

(like, all quadrangle sides must be longer than X, all corners must be >30°, must be convex)

There are currently no sanity checks for command line paramters, and in case of any error it simply crashes.

Only rudimentary comments, and the code is a mess.

The quadrangle detection and the preprocessing could be improoved a lot.

Aspect ratio recognition has not been iintegrated, and currently it simply maps all rectangles to 1682x2378 pixels (DIN A4)

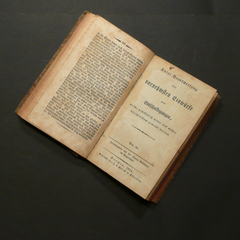

But it worked for all the photographed pages I threw at it.

I want to make some of these changes and then make an official release, but I do not know how soon I get around to that.

Might be a while, since the original reason I made this script was the thousands of pages I photographed, and I still have to read them all.

Code: Select all

#!/usr/bin/python

# This program is free software: you can redistribute it and/or modify

# it under the terms of the GNU General Public License as published by

# the Free Software Foundation, either version 3 of the License, or

# (at your option) any later version.

# This program is distributed in the hope that it will be useful,

# but WITHOUT ANY WARRANTY; without even the implied warranty of

# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

# GNU General Public License for more details.

# You should have received a copy of the GNU General Public License

# along with this program. If not, see <http://www.gnu.org/licenses/>.

#

################################################################

#

# This is a standalone program.

# it requires

# - python 2.6+ ( 2.5 or 2.4 works probably too)

# - openCV with PythonInterface installed

# - imagemgagick with convert somewhere in the path (only required for batch processing)

# - sobelseries.bat in the same directory or somewhere in the path (only required for batch processing)

#

################################################################

#

# USAGE:

#

# 1) DISPLAY

# houghlines.py IMAGEFILE

# use hough transform to detect lines in the image,

# identify most prominent perspective-distorted rectangle,

# display results on screen, wait for keypress

#

# 2) BATCH PROCESSING

# houghlines.py INFILE OUTFILE

# use sobelseries.bat to filter nonrelevant edges, (NEEDS SOBELSERIES.BAT)

# identify most prominent rectangle,

# correct perspective in INFILE, write results to OUTFILE (NEEDS IMAGEMAGICK)

import sys

import subprocess

import os

from math import sin,cos,sqrt,degrees,radians

from opencv.cv import *

from opencv.highgui import *

# Find point (x,y) where two parameterized lines intersect

# lines are given i the hough form (rho,theta)

# returrns None for parallel lines

def intersectLines2(r1, t1, r2, t2) :

ct1=cos(t1)

st1=sin(t1)

ct2=cos(t2)

st2=sin(t2)

d=ct1*st2-st1*ct2

if abs(d)>0.000001 :

x = ((st2*r1-st1*r2)/d)

y = ((-ct2*r1+ct1*r2)/d)

return (x,y)

else: #lines are parallel

return None

def intersectLines(line1,line2):

return intersectLines2(line1[0],line1[1],line2[0],line2[1])

#returns true if the point is within the given axis-aligned rectangle. all points are tuples

def pointInRect(point,rect):

topLeft,bottomRight = rect

if point[0]>=topLeft[0] and point[1]>=topLeft[1] and point[0]<=bottomRight[0] and point[1]<=bottomRight[1]:

return True

else: return False

def angledist(theta1,theta2):

return abs( (theta1 + CV_PI/2 - theta2) % CV_PI - CV_PI/2 )

# print degrees(angledist(radians(0),radians(0))),"=0"

# print degrees(angledist(radians(0),radians(90))),"=90"

# print degrees(angledist(radians(0),radians(180))),"=0"

# print degrees(angledist(radians(0),radians(270))),"=90"

# print degrees(angledist(radians(0),radians(360))),"=0"

# print degrees(angledist(radians(0),radians(179))),"=1"

# print degrees(angledist(radians(0),radians(181))),"=1"

# print degrees(angledist(radians(180),radians(0))),"=0"

# print degrees(angledist(radians(180),radians(90))),"=90"

# print degrees(angledist(radians(180),radians(180))),"=0"

# print degrees(angledist(radians(180),radians(270))),"=90"

# print degrees(angledist(radians(180),radians(360))),"=0"

# print degrees(angledist(radians(180),radians(-1))),"=1"

# print degrees(angledist(radians(180),radians(1))),"=1"

# print degrees(angledist(radians(-180),radians(0))),"=0"

# print degrees(angledist(radians(-180),radians(90))),"=90"

# print degrees(angledist(radians(-180),radians(180))),"=0"

# print degrees(angledist(radians(-180),radians(270))),"=90"

# print degrees(angledist(radians(-180),radians(360))),"=0"

# print degrees(angledist(radians(-180),radians(-1))),"=1"

# print degrees(angledist(radians(-180),radians(1))),"=1"

def angle_deg(line1,line2):

return angledist(line1[1] ,line2[1]) / CV_PI * 180

#this does not work in some cases

def rhodist(rho1,rho2):

#return abs( abs(rho1)-abs(rho2) )

return abs( rho1-rho2 )

def pointdist(p1,p2):

return sqrt((p1[0]-p2[0])**2 + (p1[1]-p2[1])**2)

# not used anymore

def sortQuadranglePoints2(pointlist):

"""

a-------b

| |

| |

c-------d

"""

# sort 4 points so that

# a = from the leftmost points, the topmost

# b = from the topmost points, the leftmost

# d = from the rightmost points the topmost

# c = last point

points = pointlist[0:4]

points.sort()

a = points.pop(0)

points.sort(key=lambda p: (p[1],p[0]))

b = points.pop(0)

points.sort(key=lambda p: (-p[0],p[1]))

d = points.pop(0)

c = points.pop(0)

print " %1.4f,%1.4f 0,0 %1.4f,%1.4f 1000,0 %1.4f,%1.4f 1000,1000 %1.4f,%1.4f 0,1000 " % (a[0],a[1],b[0],b[1],d[0],d[1],c[0],c[1])

return a,b,c,d

def sortQuadranglePoints(pointlist):

"""

a-------b

| |

| |

c-------d

"""

# sort 4 points so that

# a = topleftmost point

# b = from the remaining points, the toprightmost point

# d = from the remaining points, the bottomrightmost point

# c = last point

points = list(pointlist[0:4])

points.sort(key=lambda p: (p[0]+p[1],p[0]))

a = points.pop(0)

points.sort(key=lambda p: (-p[0]+p[1],p[1]))

b = points.pop(0)

points.sort(key=lambda p: (-p[0]-p[1],-p[0]))

d = points.pop(0)

c = points.pop(0)

return a,b,c,d

# picks a rectangel from an ordered list of points

# algorithm:

# - start with the first line

# - keep adding lines that satisfy rectangle criteria

# returns the 4 corner points or None

def pickQuadrangle(houghlines,width,height):

"""

a-------b b-------a

| | | |

| | | |

c-------d d-------c

"""

# at the end the corner points will be ordered abdc clockwise or counterclockwise

a,b,c,d = None,None,None,None # corner points

vanish1,vanish2 = None,None # vanishing points

ab,ac,bd,cd = None,None,None,None # lines

mindist =min (width,height) / 6 # minimum lengt of one side

bigrect =(-width/4,-height/4),(width*1.25,height*1.25) # the 4 corners have to be in this area

smallrect=(width/4,height/4),(width*0.75,height*0.75) # the 2 vanishing points have to be outside this area

lines = houghlines[:]

for dummy in 0, :

#pick the strongest line as ab

if len(lines)>0:

ab = lines.pop(0)

print "found ab", ab

else:

break

for line in lines:

# pick line ~perpendicular to first line (angle + pointinrect):

ac = line

if angle_deg (ab , ac) < 45 : continue

a = intersectLines (ab , ac)

if not a or not pointInRect (a , bigrect) : continue

print "found ac", ac

break

else:

ac = None; a = None

break

lines.remove(ac)

for line in lines:

# pick line ~perpendicular to either line

bd = line

if angle_deg (ac , bd) > 30 : (ab,ac) = (ac,ab)

if angle_deg (ab , bd) < 30 : continue

b = intersectLines (ab , bd)

if not b or not pointInRect (b , bigrect) : continue

if pointdist (b , a) < mindist : continue

vanish1 = intersectLines (ac , bd)

# vanishing point should be None or far away

if vanish1 and pointInRect (vanish1 , smallrect) : continue

print "found bd", bd

break

else:

bd = None; b = None; vanish1 = None

break

lines.remove(bd)

for line in lines:

# pick line perpendicular to ac AND bd:

cd = line

if angle_deg (ac , cd) < 30 : continue

if angle_deg (bd , cd) < 30 : continue

c = intersectLines (cd , ac)

if not c or not pointInRect (c , bigrect) : continue

if pointdist (c , a) < mindist : continue

if pointdist (c , b) < mindist : continue

d = intersectLines (cd , bd)

if not d or not pointInRect (d , bigrect) : continue

if pointdist (d , a) < mindist : continue

if pointdist (d , b) < mindist : continue

if pointdist (d , c) < mindist : continue

vanish2 = intersectLines (cd , ab)

# vanishing point should be None or far away

if vanish2 and pointInRect( vanish2 , smallrect) : continue

print "found cd", cd

break

else:

cd=None; c=None; d=None; vanish2=None

break

lines.remove(cd)

else: return (a,b,c,d)

print "NOT ENOUGH LINES"

return None

#convert s a openCv data structure into a proper python list of tupples

def convertCVList(linesCV):

lines=[]

for line in linesCV: lines.append((float(line[0]),float(line[1])))

return lines

# check if a line is similar to some other lines

def distinctLine(line,goodlines,min_rho_dist,min_theta_dist):

rho2,theta2 = line

for rho1,theta1 in goodlines:

if rhodist(rho1,rho2)<min_rho_dist and angledist(theta1,theta2)<min_theta_dist:

return False

return True

# from an ordered list of lines , remove all lines that are "very similar" to an earlier line.

# makeshift Non-Maximum-Supression

def filterLines(lines,min_rho_dist,min_theta_dist):

goodlines=[]

for line in lines:

if distinctLine(line,goodlines,min_rho_dist,min_theta_dist): goodlines.append(line)

return goodlines

if __name__ == "__main__":

filename = "testhough.jpg"

if len(sys.argv)>1:

filename = sys.argv[1]

if len(sys.argv)>2:

cmdline = "sobelseries.bat",sys.argv[1],"tmpsobel.png"

try: os.remove("tmpsobel.png")

except Exception: pass

print "> ",cmdline

subprocess.check_call(cmdline,shell=True)

filename = "tmpsobel.png"

src=cvLoadImage(filename, 0);

if not src:

print "Error opening image %s" % filename

sys.exit(-1)

dst = cvCreateImage( cvGetSize(src), 8, 1 );

color_dst = cvCreateImage( cvGetSize(src), 8, 3 );

color_dst_small = cvCreateImage( cvSize(min(src.width,1000),min(src.height,900)), 8, 3 );

storage = cvCreateMemStorage(0);

#cvCanny( src, dst, 20, 50, 3 );

cvCanny( src, dst, 50, 200, 3 );

#cvCanny( src, dst, 100, 1000, 3 );

#dst=src

#lines = cvHoughLines2( dst, storage, CV_HOUGH_STANDARD, 1, CV_PI/180, 100, 0, 0 );

#lines = cvHoughLines2( dst, storage, CV_HOUGH_MULTI_SCALE, 10, CV_PI/180, 100, 10, 11 );

linesCV = cvHoughLines2( dst, storage, CV_HOUGH_STANDARD, 1, CV_PI/180 , 50, 0, 0 );

lines=convertCVList(linesCV)

min_rho_dist=100

min_theta_dist=radians(10)

goodlines=filterLines(lines,min_rho_dist,min_theta_dist)

quadranglePoints = pickQuadrangle(lines,src.width,src.height)

if quadranglePoints:

a,b,c,d = sortQuadranglePoints(quadranglePoints)

print a,b,c,d

if pointdist(a,b) + pointdist(c,d) > pointdist(a,c) + pointdist(b,d):

#landscape

perspectivestring = "%1.2f,%1.2f 1682,0 %1.2f,%1.2f 1682,2378 %1.2f,%1.2f 0,0 %1.2f,%1.2f 0,2378" % (a[0],a[1],b[0],b[1],c[0],c[1],d[0],d[1])

else:

perspectivestring = "%1.2f,%1.2f 0,0 %1.2f,%1.2f 1682,0 %1.2f,%1.2f 0,2378 %1.2f,%1.2f 1682,2378" % (a[0],a[1],b[0],b[1],c[0],c[1],d[0],d[1])

print perspectivestring

if len(sys.argv)>2:

cmdline = "convert",sys.argv[1],"-set option:distort:viewport 1682x2378","-distort perspective \"" + perspectivestring + "\"",sys.argv[2]

print "> ", cmdline

subprocess.check_call( " ".join(cmdline),shell=True)

if len(sys.argv)<>3:

cvCvtColor( dst, color_dst, CV_GRAY2BGR );

for i in range(min(len(goodlines), 100)-1, -1, -1):

line = goodlines[i]

#print line[0], round(line[1]/CV_PI*180)

rho = line[0];

theta = line[1];

pt1 = CvPoint();

pt2 = CvPoint();

a = cos(theta);

b = sin(theta);

x0 = a*rho

y0 = b*rho

pt1.x = cvRound(x0 + 5000*(-b));

pt1.y = cvRound(y0 + 5000*(a));

pt2.x = cvRound(x0 - 5000*(-b));

pt2.y = cvRound(y0 - 5000*(a));

linecolor=CV_RGB(255-min(255*i/10,200),0,255*i/10)

cvLine( color_dst, pt1, pt2, linecolor, 4, 8 );

def makeCvPoint(point):

cvpoint = CvPoint()

cvpoint.x = cvRound(point[0])

cvpoint.y = cvRound(point[1])

return cvpoint

if quadranglePoints:

a,b,c,d = sortQuadranglePoints(quadranglePoints)

cvCircle (color_dst, makeCvPoint(a), 20, CV_RGB(255,0,0), -1)

cvCircle (color_dst, makeCvPoint(b), 20, CV_RGB(255,255,0), -1)

cvCircle (color_dst, makeCvPoint(c), 20, CV_RGB(0,255,0), -1)

cvCircle (color_dst, makeCvPoint(d), 20, CV_RGB(0,0,255), -1)

for x1,y1 in quadranglePoints:

for x2,y2 in quadranglePoints:

if x1!=x2 or y1!=y2:

pt1 = CvPoint()

pt2 = CvPoint()

pt1.x=cvRound(x1)

pt1.y=cvRound(y1)

pt2.x=cvRound(x2)

pt2.y=cvRound(y2)

cvLine( color_dst, pt1, pt2, CV_RGB(0,255,255), 2, 8 );

else:

#lines = cvHoughLines2( dst, storage, CV_HOUGH_PROBABILISTIC, 1, CV_PI/180, 50, 40, 5 );

lines = cvHoughLines2( dst, storage, CV_HOUGH_PROBABILISTIC, 1, CV_PI/180, 10, 50, 1000 );

cvCvtColor( dst, color_dst, CV_GRAY2BGR );

for i in range(min(lines.total, 10)-1, -1, -1):

line = lines[i]

linecolor=CV_RGB(255-min(255*i/10,200),0,255*i/10)

cvLine( color_dst, line[0], line[1], linecolor, 5, 8 );

cvNamedWindow( "Source", 1 );

cvShowImage( "Source", src );

cvResize ( color_dst, color_dst_small)

cvNamedWindow( "Hough", 1 );

cvShowImage( "Hough", color_dst_small );

cvWaitKey(0);